Joint 3D Reconstruction of Semantics and Geometry

Tsinghua University

Advisor: Yongjin Liu, Professor, Department of Computer Science and Technology, Tsinghua University

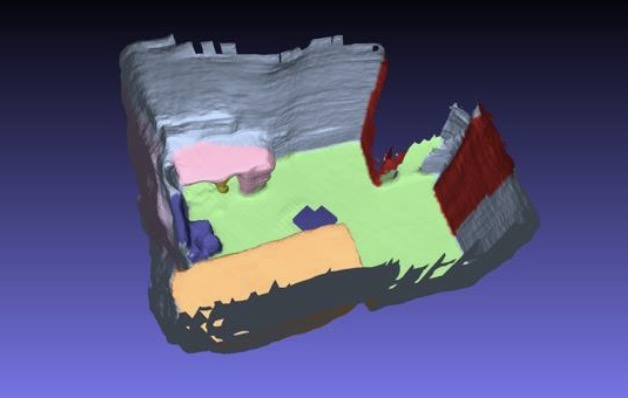

Built an end-to-end network that jointly reconstructs 3D geometry and semantics from multiple views. We proposed a coarse-to-fine method that alternately refines the predicted ray depths and the 3D feature space: predicted ray depths constrain the 2D-to-3D feature projection, and a more precise 3D feature space in turn yields more accurate ray depths. This two-way refinement explicitly fuses visual and semantic information and produced more accurate reconstructions than previous models.
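The alternating refinement can be illustrated on a single camera ray. The following is a toy sketch under stated assumptions, not the project's network: the Gaussian depth weighting, the `evidence` vector standing in for what a 3D network would infer, and all function names are hypothetical and chosen only to show how a depth estimate and a depth-constrained feature lifting can sharpen each other.

```python
import numpy as np

def depth_guided_weights(sample_depths, predicted_depth, sigma):
    """Soft weights over samples on one ray, peaked at the predicted depth."""
    w = np.exp(-0.5 * ((sample_depths - predicted_depth) / sigma) ** 2)
    return w / w.sum()

def expected_depth(sample_depths, occupancy):
    """Occupancy-weighted mean depth along the ray."""
    p = occupancy / occupancy.sum()
    return float((p * sample_depths).sum())

def refine_ray(sample_depths, pixel_feature, evidence, depth, sigma, steps=3):
    """Alternate between depth-constrained lifting and depth re-estimation."""
    for _ in range(steps):
        w = depth_guided_weights(sample_depths, depth, sigma)
        # Lift the 2D pixel feature onto the 3D samples, constrained by depth.
        voxel_feats = w[:, None] * pixel_feature
        # Stand-in for the 3D network (assumption): occupancy is the lifted
        # feature magnitude modulated by fixed per-sample evidence.
        occupancy = np.linalg.norm(voxel_feats, axis=1) * evidence
        depth = expected_depth(sample_depths, occupancy)
        sigma *= 0.5  # coarse-to-fine: tighten the depth constraint each pass
    return depth

sample_depths = np.linspace(1.0, 4.5, 8)       # 8 samples, 0.5 apart
pixel_feature = np.array([1.0, 0.5])           # hypothetical 2D feature
# Toy evidence for a surface near depth 3.0 (assumption for illustration).
evidence = np.exp(-0.5 * ((sample_depths - 3.0) / 0.5) ** 2)

refined = refine_ray(sample_depths, pixel_feature, evidence, depth=2.0, sigma=1.0)
print(f"initial depth 2.0 -> refined depth {refined:.2f}")
```

In this toy setting the coarse initial guess is pulled toward the surface over successive passes while the constraint narrows; a real system would replace the fixed `evidence` with learned 3D features.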

- Investigated the combination of 3D geometry reconstruction and semantic labeling of 3D voxels.

- Introduced multi-view feature correlation to achieve robust 2D-to-3D feature fusion under occlusion, mitigating the feature ambiguity that plain feature averaging caused in previous works.

- Proposed a two-branch 3D voxel network to jointly reconstruct and label the voxels.

- Achieved smooth and accurate 3D reconstruction and semantic labeling.
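To make the contrast between feature averaging and correlation-based fusion concrete, here is a minimal sketch for a single voxel observed from several views. It assumes a simple scheme in which each view is weighted by its mean cosine similarity to the other views; that weighting, the `temperature` parameter, and the function names are illustrative assumptions, not the project's actual formulation.

```python
import numpy as np

def fuse_mean(view_feats):
    """Baseline: average the per-view features projected onto one voxel."""
    return view_feats.mean(axis=0)

def fuse_by_correlation(view_feats, temperature=0.1):
    """Weight each view by how well it agrees with the other views.

    view_feats has shape (V, C): V views, C feature channels.
    """
    norms = np.linalg.norm(view_feats, axis=1, keepdims=True)
    unit = view_feats / (norms + 1e-8)
    sim = unit @ unit.T                      # (V, V) cosine similarities
    np.fill_diagonal(sim, 0.0)               # ignore self-similarity
    agreement = sim.sum(axis=1) / (len(view_feats) - 1)
    logits = agreement / temperature
    weights = np.exp(logits - logits.max())  # numerically stable softmax
    weights /= weights.sum()
    return weights @ view_feats, weights

# Three views see the same surface; the fourth is occluded and sees
# something unrelated (toy values).
feats = np.array([
    [1.0, 0.0, 0.0],
    [0.9, 0.1, 0.0],
    [1.0, 0.1, 0.0],
    [0.0, 1.0, 0.0],   # occluded view
])

mean_feat = fuse_mean(feats)
fused_feat, weights = fuse_by_correlation(feats)
print("mean fusion:       ", np.round(mean_feat, 3))
print("correlation fusion:", np.round(fused_feat, 3))
print("view weights:      ", np.round(weights, 3))
```

Under these assumptions the occluded view receives a much smaller weight than the consistent views, so its feature contaminates the fused voxel far less than it does with a plain mean.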

Example reconstruction: the geometric mesh, with semantic labels shown in different colors.